Random Thoughts, September 2025

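Neway, long time no see.

Before putting pen to paper, I had been mulling over a letter to Lucas. I wanted to tell him what the world is like right now and how much his mom and dad love him. But at some point it suddenly dawned on me that a letter to him may not need to be written deliberately at all: my being there for every moment of his growing up is the best footnote.

But I really do have to talk with you for a while. Every time I go back and read the letters I wrote to us, I can immediately recapture the emotions, even the scenes, of that moment. So in 2025, a year in which so much has happened, at what may be the biggest turning point of my life, I have to write something.

I don't know where you will be or what kind of work you will be doing when you read this, but I know you will immediately feel how lost I am right now.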

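Ever since I decided to do a PhD, I have been set on getting a faculty position. The biggest reason is that I love teaching in front of a class and having fun, lively exchanges of ideas with students. That is still true: last week Blanton was away at a conference and asked me to cover one of his lectures, and for me it was an incredibly fun and relaxing experience.

But I'm not much of a grinder. Perhaps with my wife by my side and Jose's encouragement and support, I have spent these five PhD years unremarkably but happily. I don't think it's wise to trade my own time and energy for career success: there are too many things in life worth experiencing. In other words, I'm not all that self-motivated. I'm not like Ma-ge, zhuolun, or Zhengqi; when I talk with them, I can clearly feel their ambition. Ambition isn't necessarily a sufficient condition for succeeding as an AP, but it is definitely a necessary one.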

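For the same reason, another thing that makes me hesitate about a faculty job is the fear of letting my students down. If I, as a PI, don't have enough self-motivation, how could I possibly motivate my students so that their PhD years are productive, or at least so they don't have to worry about graduating?

On the other hand, I feel my high-level vision is quite limited. As an independent researcher, I only need to tinker with whatever interests me. But if I had students I was responsible for, I certainly couldn't just do whatever I wanted the way I do now. So if I became an AP and took on students, these worries would push me to talk more with industry and senior researchers, to read N more papers every day, and to spend far more time on research than I do now. That seems to conflict with a view I've always held:

I don't think it's wise to trade my own time and energy for career success.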

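Of course I'm interested in research. In fact, just like teaching, every paper discussion gets me excited. Seen this way, maybe becoming a research scientist at a company is the path that suits me best? I wouldn't have to be responsible for anyone else (or rather, I'd only be responsible to my manager for meeting their expectations of me), while still getting to explore and try fancy things with a group of smart people.

But if you asked me to commit to industry, I would start hesitating and wavering too. I would even conjure up a whole chain of reasoning to overturn what I just said. For example: gaining vision in academia doesn't necessarily mean spending far more time on research than now, since you don't have to deal with messy coding and implementation. Or: deep down I'm not afraid of being responsible for someone; I'm afraid my ability will fall short and I'll fail that responsibility.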

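Sigh.

Actually, from an outsider's perspective, I can give myself some comforting advice, for example: whichever way I choose, the outcome will surely be pretty good, so there's no need to agonize. But when it really comes to choosing for myself, I just can't make up my mind: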

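Stay in the US? The political climate here, and living half a world away from my parents.

Go back to China? I haven't lived in mainland China for any extended period since I was 17. Would I get used to life and work there? I keep hearing it's very toxic; what if I can't adapt? This is most likely a one-way road.

Go to industry? Do I really want to change a view I've held for so long?

Go to academia? I've already covered that...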

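There's a lot more I want to say, like my thoughts on LLMs. But Neway, I have to go to a meeting, so let me just paste a rambling post I put on WeChat Moments a while back.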

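The first project I led was writing a layout decomposition tool that was going to be open-sourced. One night, while scratching my head trying to understand the algorithm, write the code, and debug, I suddenly discovered that a formulation my advisor had proposed ten years earlier had a flaw that made the result non-optimal in a certain case.

Building on that, I published my first first-author CCF-A paper.

Then AI took off, but to me, CNNs and GNNs were just one more tool that let me solve problems more handily.

Along the way I'd occasionally have a flash of inspiration and come up with ideas I really liked, such as SDP-based floorplanning.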

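But since LLMs arrived, and especially while working on LLM-related projects, I've found my joy in research slowly fading. I feel less and less like an explorer, a warrior. I'm just a laborer hired by the LLM, carrying it on my shoulders as it sweeps across the universe, and the LLM may not even need me to carry it.

The bug in my advisor's formulation that I racked my brain to find, those flashes of inspiration: for an LLM, they might take nothing more than the right prompt or agent flow, followed by a few seconds of inference.

I don't know how to end this, nor how to make peace with this fact. The bittersweetness of life, how interesting it all is.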

Circuit Fusion Reading Notes

Circuit Fusion

Basic idea: learn a fused circuit embedding by combining different modalities: RTL code, function description, netlist graph, and RTL AST.

Data

41 RTL designs -> 7,166 RTL sub-circuits -> 57,328 generated sub-circuits with the same functions (8 per original sub-circuit)

summary - generated by GPT-4 (the prompt input is the Verilog code)

code - already available

graph - derived from the code; nodes are operators.

How is a circuit divided into sub-circuits?

Training details

Intra-modal learning

- mask circuit graph nodes (operators, e.g., AND, XOR, MUX) and predict the masked nodes

- intra-modal contrastive learning: positive samples are sub-circuits with the same functionality

- Q: How are functionally equivalent sub-circuits created, and how is their correctness ensured? A: using the open-source tools Yosys and ABC
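The notes don't record the exact contrastive objective, so this sketch assumes the common InfoNCE form: each sub-circuit embedding should be most similar to the embedding of its functionally equivalent variant, with the other items in the batch acting as negatives. The embeddings are random stand-ins, not outputs of the paper's encoders.

```python
import numpy as np

def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE loss: row i of `positives` is the positive for row i of `anchors`;
    every other row in the batch serves as a negative."""
    # L2-normalise so the dot product is cosine similarity
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = a @ p.T / temperature                      # (batch, batch) similarities
    logits -= logits.max(axis=1, keepdims=True)         # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))                 # positives sit on the diagonal

rng = np.random.default_rng(0)
emb = rng.normal(size=(4, 8))                    # hypothetical sub-circuit embeddings
equiv = emb + 0.01 * rng.normal(size=(4, 8))     # hypothetical embeddings of equivalent variants
loss_matched = info_nce(emb, equiv)              # positives really are similar: low loss
loss_random = info_nce(emb, rng.normal(size=(4, 8)))  # unrelated "positives": high loss
print(loss_matched, loss_random)
```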

Cross-modal alignment

Multi-modal fusion

- Masked summary modeling

Query: masked summary embedding (parts of the high-level summary are randomly masked)

Key, Value: mix-up of the code and graph embeddings

Q: Why not simply mix up everything and feed it into a transformer (self-attention)?

- Summary and mix-embedding matching
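A minimal sketch of the masked-summary fusion step as cross-attention, assuming "mix-up" means concatenating code-token and graph-node embeddings into one key/value sequence. It uses a single head and no learned projections, so it only shows the data flow. Unlike self-attention over everything, only the summary side is updated, and the score matrix is n_q × n_kv instead of (n_q + n_kv) × (n_q + n_kv); whether that is the authors' actual reason is still the open question above.

```python
import numpy as np

def cross_attention(query, key_value):
    """Single-head scaled dot-product cross-attention (no learned projections).
    query:     (n_q, d), e.g. masked-summary token embeddings
    key_value: (n_kv, d), e.g. concatenated code + graph embeddings"""
    d = query.shape[-1]
    scores = query @ key_value.T / np.sqrt(d)          # (n_q, n_kv)
    scores -= scores.max(axis=1, keepdims=True)        # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)      # softmax over keys
    return weights @ key_value, weights

rng = np.random.default_rng(0)
summary_q = rng.normal(size=(3, 16))                   # hypothetical: 3 summary tokens
code_graph = np.vstack([rng.normal(size=(5, 16)),      # hypothetical: 5 code tokens
                        rng.normal(size=(7, 16))])     # hypothetical: 7 graph nodes
out, w = cross_attention(summary_q, code_graph)
print(out.shape, w.shape)  # (3, 16) (3, 12)
```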

Alignment with netlist (netlist-RTL)

What is RAG inference here?

Evaluation: what tasks?

- predicting slack for each individual register (sub-circuit level)

```
slack calculation example (thanks deepseek)
...
```

- worst negative slack (WNS) prediction

- total negative slack (TNS) prediction

- power prediction

- area prediction

The last four tasks are circuit-level.
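A minimal sketch of how per-register setup slack relates to WNS and TNS, assuming a single clock and the simplified setup check slack = (clock period + clock skew - setup time) - data arrival time. The register names and delays are made up, and real STA tools account for many more effects (clock latency, uncertainty, multiple corners).

```python
def setup_slack(clock_period, arrival, setup_time, clock_skew=0.0):
    """Setup slack = required time - arrival time (single clock, simplified)."""
    required = clock_period + clock_skew - setup_time   # latest allowed arrival at the D pin
    return required - arrival

period = 1.0  # ns, i.e. a 1 GHz clock
# Hypothetical endpoints: name -> (data arrival time at D pin, setup time), in ns.
# Arrival = launch clk-to-q delay + combinational path delay.
endpoints = {"reg_a": (0.80, 0.05), "reg_b": (1.10, 0.05), "reg_c": (1.02, 0.06)}

slacks = {name: setup_slack(period, arr, su) for name, (arr, su) in endpoints.items()}
wns = min(slacks.values())                        # worst (most negative) slack
tns = sum(s for s in slacks.values() if s < 0)    # sum of all negative slacks
print({k: round(v, 2) for k, v in slacks.items()}, round(wns, 2), round(tns, 2))
```

Conventions vary: some tools report WNS as 0 when every path meets timing.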

Q: How are sub-circuit embeddings used for circuit-level predictions? Concatenation? If so, how do they handle the number of sub-circuits varying from design to design? A: They sum the embeddings, concatenate the result with some design-level features (which ones does their experiment include?), and then feed that into a regression model.
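A minimal sketch of the aggregation described in the answer above: summing makes the vector length independent of how many sub-circuits a design has (and of their order). Which regression model the paper uses isn't recorded in these notes, so ordinary least squares is an assumed stand-in, and all data here is random placeholder data.

```python
import numpy as np

def design_features(sub_embeddings, design_level):
    """Turn a variable-size set of sub-circuit embeddings into one fixed-length vector.

    sub_embeddings: (num_subcircuits, d); num_subcircuits differs per design
    design_level:   (k,) design-level features, e.g. counts of each operator type
    """
    pooled = sub_embeddings.sum(axis=0)                  # (d,), independent of count/order
    return np.concatenate([pooled, design_level])        # (d + k,)

rng = np.random.default_rng(0)
d, k = 4, 2
sub_counts = [3, 7, 5, 10, 2, 8, 6, 4, 9, 5]             # hypothetical designs
designs = [(rng.normal(size=(n, d)), rng.normal(size=k)) for n in sub_counts]

X = np.stack([design_features(e, f) for e, f in designs])   # (10, d + k)
y = rng.normal(size=len(designs))                            # placeholder labels (e.g. area)

# Ordinary least squares with a bias column, standing in for "a regression model"
X1 = np.hstack([X, np.ones((len(X), 1))])
w, *_ = np.linalg.lstsq(X1, y, rcond=None)
pred = X1 @ w
print(X.shape, w.shape)  # (10, 6) (7,)
```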

Q: This compares against SNS v2, but the code doesn't seem to be published?

Note: in the circuit-level tasks, they include design-level features, e.g., the number of each operator type. We need to figure out which features they use.

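A Simple Autograder Tutorial

A while ago I wanted to write an autograder for an assignment, and after searching all over the web I found no tutorial in Chinese, so I wrote this one. (It's also a backup for myself to look at later.)

First, prepare a folder called autograder_folder. Note that the contents of this folder are uploaded to /autograder/source on the server.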

Step 1

Create setup.sh. This is the first script the autograder server runs; it is usually where you install the environment the autograder server needs. Example:

```bash
#!/usr/bin/env bash
# ...
```

Step 2

Create a script named run_autograder that roughly does the following:

- copy the student's submission (located in /autograder/submission) to wherever you need it

- run the student's submission

- grade it

Example:

```bash
#!/usr/bin/env bash
# ...
```

Grading

Grading needs two files: run_tests.py

```python
import unittest
...
```

and tests/test.py

Below is my example. The main thing to look at is the prefix on each function; it's easy to understand.

```python
import unittest
...
```

Finally

Note: don't zip the autograder_folder folder itself.

Instead, select all the files inside autograder_folder and zip those.
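If you'd rather script the packaging step, here is a sketch using Python's zipfile module. Storing each file under its path relative to autograder_folder puts setup.sh and run_autograder at the root of the archive, which is what the note above asks for. The folder and archive names in the usage block are placeholders.

```python
import zipfile
from pathlib import Path

def zip_folder_contents(folder, zip_path):
    """Zip everything *inside* `folder` so files sit at the archive root
    (setup.sh, not autograder_folder/setup.sh). Keep zip_path outside `folder`."""
    folder = Path(folder)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(folder.rglob("*")):
            if path.is_file():
                # arcname relative to the folder drops the "autograder_folder/" prefix
                zf.write(path, arcname=path.relative_to(folder).as_posix())
    with zipfile.ZipFile(zip_path) as zf:
        return zf.namelist()

if __name__ == "__main__":
    import tempfile
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "autograder_folder"
        (src / "tests").mkdir(parents=True)
        for name in ("setup.sh", "run_autograder", "run_tests.py", "tests/test.py"):
            (src / name).write_text("# placeholder\n")
        print(zip_folder_contents(src, Path(tmp) / "autograder.zip"))
```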

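Random Thoughts, June 2024

Wuxia and Research

I recently finished the wuxia game Wandering Sword (逸剑风云决). My review on Steam has gotten nearly a hundred upvotes:

I'm a researcher of sorts, and to me the world of research has a lot in common with the world of wuxia.

There is a jianghu, there are masters, there are social niceties, and every group (sect) has its own specialty.

The best professors are like the grandmasters, and we are the youngsters somewhere between "novice" and "adept".

And Yuwen Yi's story really inspired me: no matter where you stand, stay kind, stay true to your original aspirations, study hard, uphold justice, and make some contribution to your country and your people. That is your own chivalrous heart, a heart anyone can have.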

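As a kid I actually didn't read much Jin Yong: at that age, reading "wuxia novels" wasn't considered a "proper pursuit". But I was lucky enough to read a whole book of idiom stories, and I was quite taken with the historical tales in it. Perhaps that's when the seeds of my love for my own people and culture were planted.

I didn't really get into wuxia until college. From Tale of Wuxia (侠客风云传) to The Legend of the Condor Heroes (射雕英雄传), at first I liked it mostly for the "thrill" the games deliver: the hero masters a peerless martial art, takes his revenge, and marries the one he loves, exactly the kind of story young men like me eat up.

At some point, what I loved about wuxia stopped being the peerless martial arts and jianghu adventures. Instead it became Guo Jing's unhesitating defense of Xiangyang and Huang Rong standing by Guo Jing through life and death. To me, these qualities rise above the jianghu and its martial arts; they are rarer and more worth learning from.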

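Perhaps because "wherever there are people, there is a jianghu", another thing I find amusing is that these wuxia games often have moments that make me laugh because they're so much like my own life.

The hero attends a martial arts conference and greets sect leaders and gang chiefs he has never met, and the first thing they ask is whether he is the last disciple of so-and-so (the head of Wudang).

😆

Isn't that exactly what happens when I go to DAC and say hi to the big names, and they all ask which school I'm from and who my advisor is?

And each sect's fighting style is each group's area of expertise. Some groups are strong in physical design, some use a lot of ML... By analogy, Shaolin mostly fights with fists, while the Gusu family loves "sorcery" like Douzhuan Xingyi.

So does my SDP method count as inventing my own martial art? (In the game it would surely be a white-tier skill, the weakest kind.) But at least it's original, right? 😆

That's why whenever I learn something new, like RL or diffusion models, an inexplicable excitement wells up in me: holy crap, I'm studying a legendary martial arts manual! And I don't have to get it from an ape or kowtow to a fairy statue; it's just handed to me!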

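Regrets and Life

The greatest regret in life is that one cannot have both youth and the awareness of youth.

During my internship lately, a first-year PhD student who lives on the way has been driving me to and from the company, so we chat excitedly the whole ride.

Naturally, we got onto the subject of regrets in life.

If I really got going, I could rattle off a whole list:

"Let me tell you, I was a born painter; back in elementary school I xxx..."

"Hey, I even made my own games as a kid, they were unbeatable."

...

But if I had to name my biggest regret, I'd probably vote for having matured too late. Because I matured too late, in how I dealt with people and things, whether friendships or romance, there are so many things I regret doing or not doing.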

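I could blame part of it on an education system where the gaokao is everything. By neglecting humanistic care, that system left students like me unable to form a healthy outlook on love and life as teenagers, so all we can do is sigh: "The first time I heard the song, I didn't understand its meaning; by the second time, I had become the person in it."

But I can't pin all the blame on the system.

In fact, I strongly agree with something Deft said:

In life, you don't really need other people's advice; you won't understand until you've been through it yourself.

What we learned in our Chinese textbooks is actually enough to carry us through life peacefully and happily: toward external things, we learned to "be neither elated by gains nor saddened by losses"; toward outcomes, that "if I have given it my all and still fall short, I can have no regrets"; and we also saw the passionate love of "until the mountains lose their peaks and the rivers run dry".

But you won't understand until you've been through it.

Only after going through something do you think: if only I had done XXX back then. In that sense, life seems destined to hold regrets, but precisely because of that, I now firmly believe in exploring, experiencing, and bravely making my own choices. Only by doing more can you experience more, and only then truly learn how to get along with the world, with others, and with yourself.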

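Pros and Cons: Stable Diffusion vs. Reinforcement Learning

After a meeting today I had a detailed discussion about SD and RL with a classmate and learned a lot, so I'm writing it down right away.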

Stable Diffusion

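Pros

- Generally high quality (of course, SD is only for generative tasks for now)

- Can generate many samples

- Stable training

PS: these are exactly the things EDA engineers love!

Cons

Requires "labels" $x_0$ (clean training samples)

The generated samples still imitate the distribution of the "labels". Can they surpass the labels? That's an open question. If they can't beat the "algorithm that generates the labels", why not just use that algorithm directly?

A few more words on this question. The answers I've seen so far:

- There is no "label-generating algorithm", but we can still produce lots of 100% accurate labels in reverse. For example, say our task is format A -> format B. There is no perfect A->B algorithm, but there is a perfect B->A algorithm. So we randomly generate B and then compute its corresponding A, which gives us training data (A, B).

- There is no algorithm, but labels are easy to collect, e.g., when SD is used to generate images.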
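A toy illustration of the first answer, using my own example rather than the task from the discussion: let A be a product of two primes and B its prime factors. Recovering B from A (factoring) has no fast exact algorithm in general, but B -> A is a single multiplication, so sampling B and computing A yields training pairs that are correct by construction.

```python
import random

def small_primes(limit):
    """Sieve of Eratosthenes: all primes below `limit`."""
    sieve = [True] * limit
    sieve[0] = sieve[1] = False
    for i in range(2, int(limit ** 0.5) + 1):
        if sieve[i]:
            sieve[i * i::i] = [False] * len(range(i * i, limit, i))
    return [i for i, is_prime in enumerate(sieve) if is_prime]

def make_dataset(n, limit=1000, seed=0):
    """Generate n exact (A, B) pairs by running the easy direction B -> A."""
    rng = random.Random(seed)
    primes = small_primes(limit)
    data = []
    for _ in range(n):
        p, q = sorted(rng.sample(primes, 2))   # random "format B": two distinct primes
        a = p * q                              # exact B -> A: just multiply
        data.append((a, (p, q)))               # training pair (A, B)
    return data

print(make_dataset(3))
```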

Reinforcement Learning

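Pros

- Applicable to all kinds of problems: generation, decision-making, control.

- Doesn't need "labels"

Cons

- Training is very unstable

- How to define a "good" action and reward is a big problem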

Some stable-baselines3 Tips

Use a gym wrapper

You need to override reset() and step()

```python
class TimeLimitWrapper(gym.Wrapper):
    ...
```

Of course, if you write the environment yourself, you don't need a wrapper.
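A sketch of what such a time-limit wrapper typically looks like, assuming the Gymnasium-style API used by recent stable-baselines3 versions: reset() returns (obs, info) and step() returns (obs, reward, terminated, truncated, info). To stay runnable without gym installed, it is written as a plain class around a hypothetical ToyEnv; in real code you would subclass gym.Wrapper and call super().__init__(env) instead.

```python
class ToyEnv:
    """Hypothetical environment: the agent walks right and never terminates by itself."""
    def reset(self, *, seed=None, options=None):
        self.pos = 0
        return self.pos, {}

    def step(self, action):
        self.pos += action
        # (obs, reward, terminated, truncated, info), as in the Gymnasium API
        return self.pos, float(action), False, False, {}


class TimeLimitWrapper:
    """Truncate an episode after `max_steps` steps (mirrors a gym.Wrapper subclass)."""
    def __init__(self, env, max_steps=100):
        self.env = env
        self.max_steps = max_steps
        self.current_step = 0

    def reset(self, **kwargs):
        # Reset our step counter together with the wrapped env
        self.current_step = 0
        return self.env.reset(**kwargs)

    def step(self, action):
        self.current_step += 1
        obs, reward, terminated, truncated, info = self.env.step(action)
        if self.current_step >= self.max_steps:
            # Time limit reached: flag as truncated, not terminated, so the
            # learner can distinguish a cut-off episode from a true terminal state
            truncated = True
        return obs, reward, terminated, truncated, info


env = TimeLimitWrapper(ToyEnv(), max_steps=3)
obs, info = env.reset()
print([env.step(1)[3] for _ in range(3)])  # [False, False, True]
```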

Values in the logger

entropy_loss

entropy_loss: a negative value; the smaller it is, the less "confident" the predictions are

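If probs = [0.1, 0.9]:

entropy $= -0.1\ln(0.1) - 0.9\ln(0.9) \approx 0.325$

entropy_loss = -0.325

If probs = [0.5, 0.5]:

entropy $= -0.5\ln(0.5) - 0.5\ln(0.5) = \ln 2 \approx 0.69$

entropy_loss = -0.69, which is smaller: the less confident prediction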
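A quick check of the numbers above: the entropy of a categorical distribution (natural log), with entropy_loss taken as its negative, as in the notes.

```python
import math

def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)

for probs in ([0.1, 0.9], [0.5, 0.5]):
    h = entropy(probs)
    print(f"probs={probs}: entropy={h:.3f}, entropy_loss={-h:.3f}")
# probs=[0.1, 0.9]: entropy=0.325, entropy_loss=-0.325
# probs=[0.5, 0.5]: entropy=0.693, entropy_loss=-0.693
```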

explained_variance

explained_variance measures how well the value function (the critic in actor-critic methods) matches the actual returns:

≈ 1: perfect

0: no better than predicting the same single value for everything

< 0: worse than simply predicting the mean
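A sketch of the formula behind these cases, explained_variance = 1 - Var[returns - predictions] / Var[returns], using only the standard library. It also shows why 0 means "predicting one value for everything": for any constant c, Var[y - c] = Var[y]. The (y_pred, y_true) argument order mirrors the stable-baselines3 helper, as far as I know.

```python
from statistics import pvariance

def explained_variance(y_pred, y_true):
    """1 - Var[y_true - y_pred] / Var[y_true]; nan if y_true has zero variance."""
    var_y = pvariance(y_true)
    if var_y == 0:
        return float("nan")
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return 1 - pvariance(residuals) / var_y

returns = [1.0, 2.0, 3.0, 4.0]
print(explained_variance(returns, returns))               # 1.0  (perfect)
print(explained_variance([2.5] * 4, returns))             # 0.0  (constant prediction)
print(explained_variance([4.0, 3.0, 2.0, 1.0], returns))  # -3.0 (worse than the mean)
```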

RL Tricks I Found on the Internet

http://joschu.net/docs/nuts-and-bolts.pdf

Premature drop in policy entropy ⇒ no learning

- Alleviate by using an entropy bonus or a KL penalty (encourages randomness)
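A sketch of how an entropy bonus enters the loss. In stable-baselines3's PPO the weight is the ent_coef argument and the total loss is policy_loss + ent_coef * entropy_loss + vf_coef * value_loss; the value term is omitted here and the numbers are made up. Because entropy_loss = -entropy, a more random policy gets a lower loss, which pushes back against premature entropy collapse.

```python
import math

def entropy(probs):
    """Shannon entropy (nats) of a categorical action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def total_loss(policy_loss, probs, ent_coef=0.01):
    """PPO-style loss with an entropy bonus (value loss omitted for brevity)."""
    entropy_loss = -entropy(probs)            # negative entropy, as logged by SB3
    return policy_loss + ent_coef * entropy_loss

# Same policy loss, different action distributions (hypothetical numbers)
print(total_loss(0.5, [0.5, 0.5]))    # uniform policy: bonus lowers the loss more
print(total_loss(0.5, [0.01, 0.99]))  # near-deterministic policy: almost no bonus
```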

I was confused as to what action I should take to improve my results after lots of experimentation, whether feature engineering, reward shaping, more training steps, or algo hyper-parameter tuning. From lots of experiments, first and foremost look at your reward function and validate that the reward value for a given episode is representative of what you actually want to achieve - it took a lot of iterations to finally get this somewhat right. If you've checked and double checked your reward function, move to feature engineering. In my case, I was able to quickly test with feature answers (e.g. data that included information the policy was supposed to figure out) to realize that my reward function was not executing like it should. To that point, start small and simple and validate while making small changes. Don't waste your time hyper-parameter tuning while you are still in development of your environment, observation space, action space, and reward function. While hyper-parameters make a huge difference, they won't correct a bad reward function. In my experience, hyper-parameter tuning was able to identify the parameters to get to a higher reward quicker, but that didn't necessarily generalize to a better training experience. I used the hyper-parameter tuning as a starting point and then tweaked things manually from there.

Lastly, how much do you need to train - the million dollar question. This is going to vary significantly from problem to problem. I found success when the algo was able to process through any given episode 60+ times. This is the factor of exploration. Some problems/environments need less exploration and others need more. The larger the observation space and the larger the action space, the more steps that are needed. For myself, I came up with this function needed_steps = number_distinct_episodes * envs * episode_length mentioned in #2 based on how many times I wanted a given episode executed. Because my problem is data analytics focused, it was easy to determine how many distinct episodes I had, and then I just needed to evaluate how many times I needed/wanted a given episode explored. In other problems, there is no clear number of distinct episodes, and the rule of thumb that I followed was to run for 1M steps and see how it goes, and then, if I'm sure of everything else, run for 5M steps, and then for 10M steps - though there are constraints on time and compute resources. I would also work in parallel: I would make some change and run a training job, and then in a different environment make a different change and run another training job. This allowed me to quickly validate which path I wanted to go down, killing jobs that I decided against without having to wait for them to finish - tmux was helpful for this.

- Comment on your last point: make more use of callbacks. https://stable-baselines3.readthedocs.io/en/master/guide/callbacks.html There are a lot of ready-made callbacks you can use to save the best model, stop training when there is no improvement, checkpoint regularly… Lots of stuff. Be explicit about using an eval environment.

Untitled

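A remote, impoverished backwater has become a beautiful village. How did the township Party secretary transform it? And with what brushwork did he paint such picturesque scenery?

He answered: With confidence, with determination, and with his whole heart, nothing more.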

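A sudden realization: life is like a painting, and I paint my scenery with my heart. I paint grass growing and orioles in flight, I paint green mountains soaring ten thousand zhang high, I paint the most dazzling scenery of all!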

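I paint my scenery with confidence, painting a clear sky and bright moon one longs to reach. At five years old, she won first prize in a singing contest, and the principal praised her: "Little girl, you were lucky to win." She shot back: "No, I earned it." She was Margaret Thatcher, and it was her confidence that added a charming yet bold scene to British politics.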

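I paint my scenery with determination, painting a suit of golden armor worn through by desert sand. The township Party secretary built up the village with extraordinary determination, and he succeeded. With the determination of "anyone who speaks of surrender again will end up like this table", Sun Quan defeated the seemingly unstoppable Cao Cao. With the determination of "stockpiling grain for the army", Deng Ai conquered Shu Han with a secondary force. With the determination of breaking the cauldrons and sinking the boats, Xiang Yu defeated three hundred thousand Qin troops... The examples are too many to list, but consider: without determination, Cao Cao might have unified the realm, Shu Han might have lingered on, and the anti-Qin forces might have vanished like smoke. Wouldn't the brilliant scenes in the scroll of history then have disappeared entirely? Therefore, I paint my scenery with determination, and I will paint the heroic resolve of "never returning until Loulan is taken".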

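I paint my scenery with my whole heart, painting a picture of the flowers of striving in full bloom. The township Party secretary threw himself wholeheartedly into building the village, and so the village took on a whole new look. And we, in the prime of our youth, what reason do we have not to throw ourselves wholeheartedly into painting the splendid scroll of our lives? Yet I see students who slack off, and young people lost in nightlife and revelry, and their life paintings are dull and lifeless. Lin Qingxuan once said: "We should bloom with our whole heart, and prove our existence in the manner of a flower." Wholeheartedness: simple to say, yet truly hard to achieve. Kongming devoted himself wholeheartedly to the great cause of restoring the Han dynasty; even in failure, he became a scene of his own. After retiring, Harland Sanders devoted himself to perfecting fried chicken and ended up painting a "KFC" scene on street corners everywhere. Kobe devoted himself wholeheartedly to basketball, starting practice at four in the morning, and forged the stroke of scenery that was the purple-and-gold dynasty. Whether a scholar, an old man, or a "rich second generation", each of them devoted himself wholeheartedly to the cause he held on to, painting with his own hands the vivid scroll of his life. How could we give up so easily?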

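Everyone has the ability to create their own scenery, but it takes confidence, determination, and above all wholehearted devotion. Then, when you suddenly turn around, you will find that scenery waiting where the lantern light is dim.

I paint my scenery with my heart; the scenery is enchanting because of my heart.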

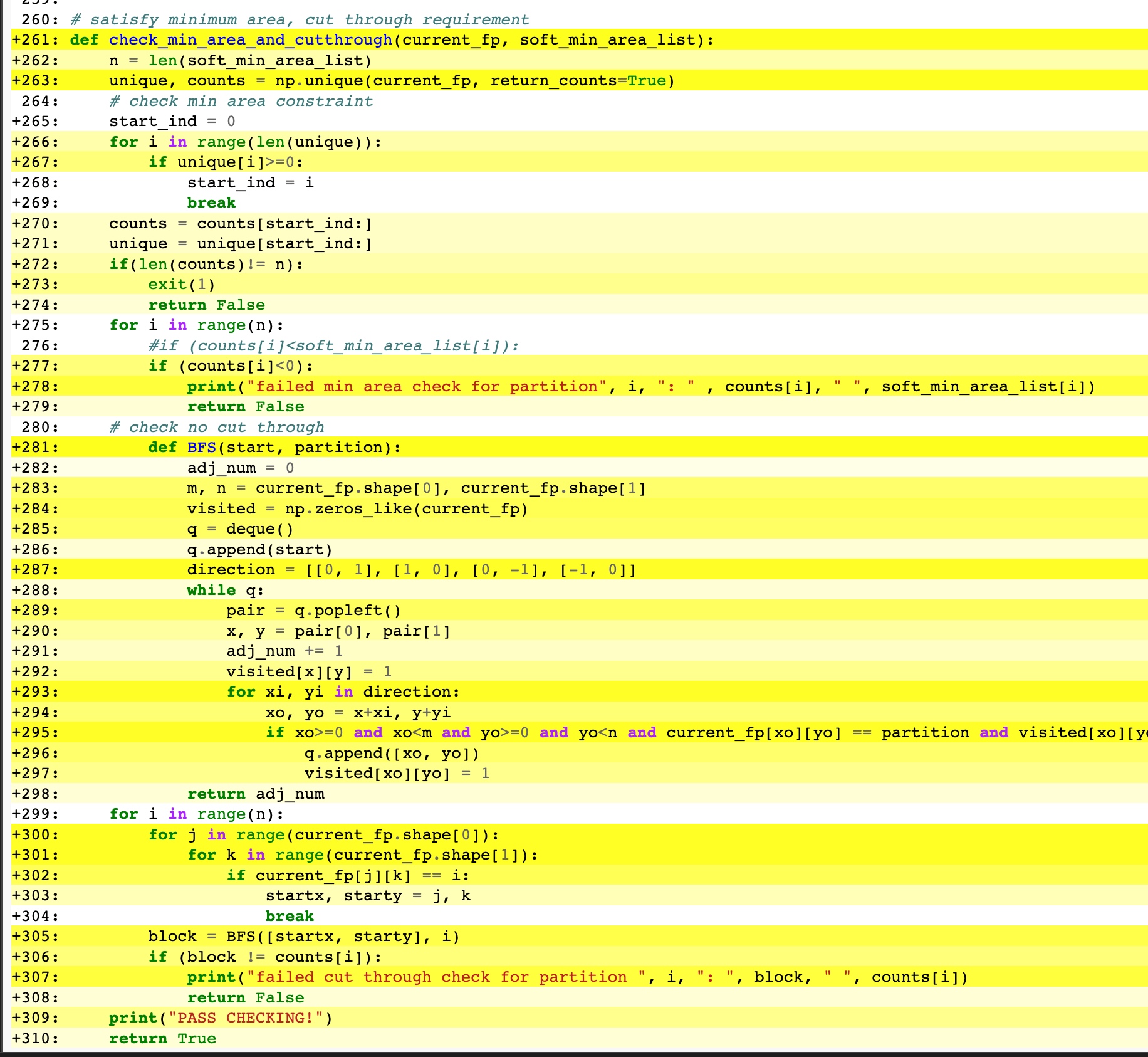

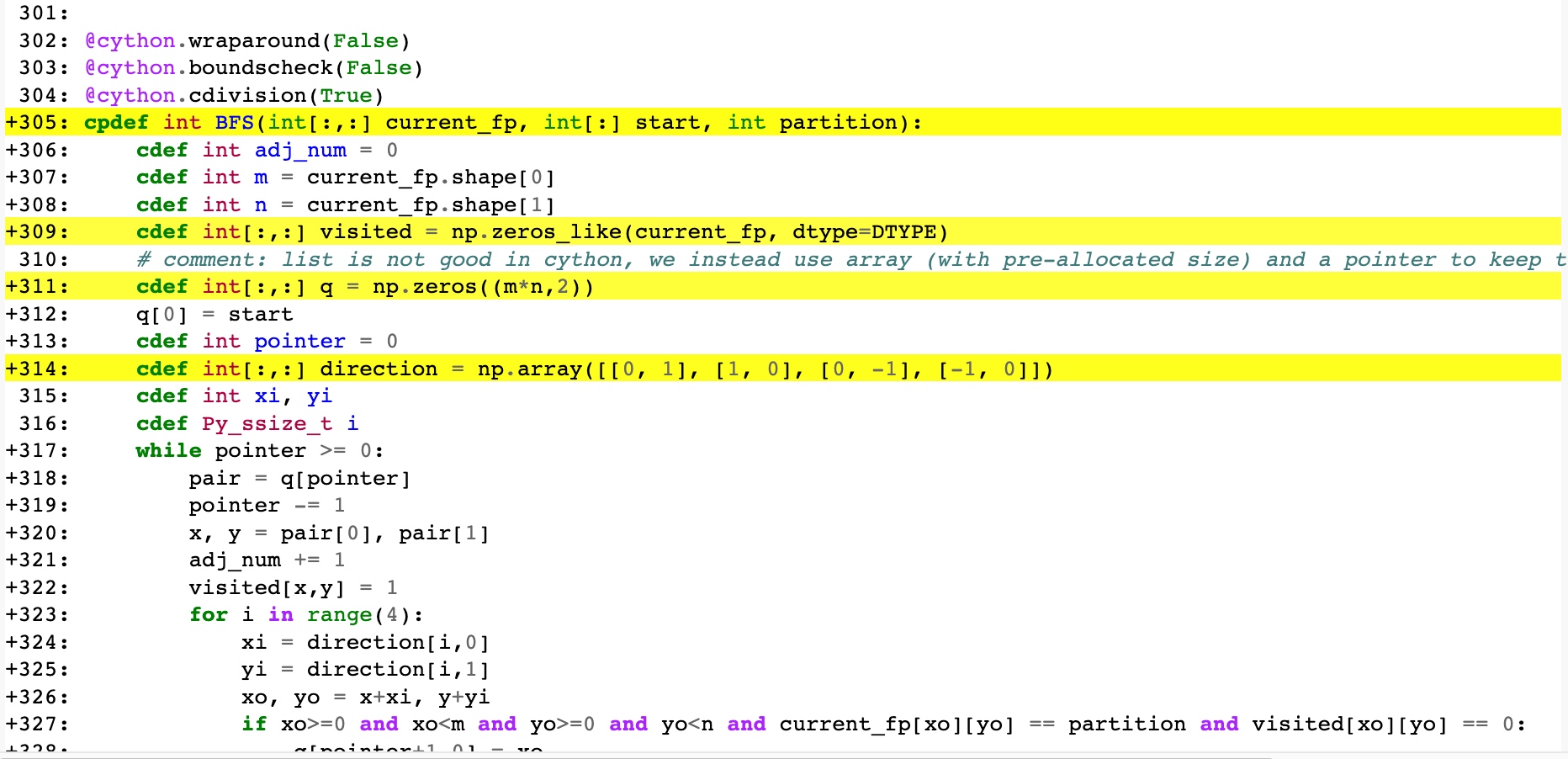

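A Guide to Optimizing Cython

Very useful resources

Official docs: https://cython.readthedocs.io/en/latest/src/userguide/numpy_tutorial.html

Bilibili: https://www.bilibili.com/video/BV1NF411i74S/

Basic usage

Cython lets you get close to C++ speed from Python. The basic approach is to write a .pyx file in Python-like syntax and then compile it into a library that Python can import directly.

Compiling requires a setup.py: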

```python
# setup.py
...
```
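A minimal setup.py sketch, assuming the module is target.pyx (the file name used with cython -a below) and that Cython and setuptools are installed. annotate=True additionally writes the HTML annotation report. If the .pyx cimports numpy, the extension would typically also need include_dirs=[numpy.get_include()].

```python
# setup.py: a minimal sketch; "target.pyx" is the module to compile
from setuptools import setup
from Cython.Build import cythonize

setup(
    ext_modules=cythonize(
        "target.pyx",
        annotate=True,                               # also emit target.html, like `cython -a`
        compiler_directives={"language_level": "3"}, # compile with Python 3 semantics
    ),
)
```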

Then run the following command to compile:

```bash
python setup.py build_ext --inplace
```

Cython also provides a handy visualization that shows which parts of the implementation are C++ and which are Python:

```bash
cython -a target.pyx
```

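In the annotated output, the darker the yellow on a line, the more Python API calls it makes, which means lower efficiency.

How to optimize

The most common points are the following:

- Add @cython.wraparound(False) @cython.boundscheck(False) @cython.cdivision(True) before the function to skip Python's time-consuming bounds checking and similar checks (of course, out-of-bounds errors will then no longer be reported, so it's best to add these only once the code is bug-free)

- Annotate the types of the function's inputs and return value

- Annotate the type of every variable you use, including numpy arrays and indices such as i and j (just like in C)

- Don't call numpy APIs directly; if you need one, write it yourself

After these changes, the loops all turn white!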

Other tips: OpenMP multithreading

In the .pyx file, add the following header:

```cython
# distutils: extra_compile_args=-fopenmp
# distutils: extra_link_args=-fopenmp
```

Then use prange in the for loop you want to run in parallel, and that's it:

```cython
# We use prange here.
...
```

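Summary

If something is simple to implement but slow in Python because of type determination, memory allocation, bounds checking, and the like, try speeding it up with Cython. The gains are significant, and most importantly it integrates easily into Python, which is a must-have for DL work.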

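Ramblings, February 2024

Hey Neway.

It's the end of February 2024.

After a winter spent "restoring the Han dynasty", I suddenly felt a sense of crisis when the semester started. The feeling was a lot like me in my second year of high school: suddenly feeling I couldn't keep goofing around like this.

Maybe I got bored of the games.

Maybe getting another paper into DAC raised my expectations of myself.

Maybe it's because I did the math on graduating: if I want to finish in four years, I have only a little over a year left.

The "go with the flow, just be happy" self-consolation I'd always relied on seemed to collapse.

All of a sudden, I started wanting to work and started counting my papers.

In this respect, I rather envy my high-school self. Once I'd made up my mind, all I had to do was study single-mindedly and prepare for the gaokao and the independent admissions exams.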

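But the adult world isn't as simple as solving problems on an exam. This sense of crisis didn't help me focus on work; instead it brought a lot of anxiety.

I'm anxious that I don't deserve my position. Anxious about whether I can graduate in four years. Anxious about whether, when I graduate and look for a faculty position or a job, I'll have connections who can help me, and if not, whether I should go collaborate with more professors.

The feeling of not deserving my position comes up now and then: when my intern mentor praised me; when I was told Apple really liked my talk and then gave me a fellowship; every time Jose tells me "good job". Of course these moments make me happy too, but after the happiness comes a long stretch of heaviness. I know my shortcomings: I'm a procrastinator, I lack a lot of VLSI background, I've never even run a single commercial EDA flow, and since college there hasn't been a single course where, like in high school, I truly felt I learned a lot and could use it with ease (astronomy excepted).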

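Luckily, these anxieties haven't affected me much. If I feel I need to learn, I just learn, without worrying about the road ahead. When it's time to network and talk about collaborations, I'll introduce myself and send emails. As Xiao Sa said:

"I feel that I am free. I don't set any goals for my tomorrow. I let the river of time carry me forward entirely; I'm like a child lying on the surface of the river: wherever the river flows, I go. I only look at the white clouds in the sky, not at what lies ahead."

I'm often grateful that I love history and have "read my share of poetry and books", so I can usually work through these heavy, negative emotions. Bamboo staff and straw sandals, lighter than a horse: who's afraid? In a straw cape through misty rain, I'll live out my life.

I love going back to read the ramblings I've written. It really feels like talking across time and space, and it often gives me endless strength. I think that you, at some point in the future, reading this, will also relive the encouragement Su Shi gave you back then, and perhaps it will help you shake off some of your worries.

How nice.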