| Work | Category | Base Model | Data | Open-source | Training cost | Problem | Metric | Experimental Conclusions | Other Remarks |
|---|---|---|---|---|---|---|---|---|---|
| DATE23-BPAN, Benchmarking LLM for automated Verilog RTL Code Generation | Fine-tuning | CodeGen, 345M-16B | GitHub: 50K files / ~300 MB; Verilog books: 100 MB | Yes | CodeGen 2B: 1 epoch, 2× RTX8000 (48 GB), 2 days; CodeGen 6B: 1 epoch, 4× RTX8000 (48 GB), 4 days; CodeGen 16B: 1 epoch, 3× A100, 6 days | Code generation for HDLBits problems | 1. Completions that compile; 2. Functional pass rate | 1. Fine-tuning significantly increases the rate of completions that compile (with 10 different completions); 2. Fine-tuned models are still poor at functional correctness on intermediate and advanced problems | LLMs are only good at small-scale, lightweight tasks |
| ChipNeMo | Fine-tuning | LLaMA2, 7B, 13B, 70B | Internal data (bug summaries, design source, documentation, verification, other): ~22B tokens; Wiki: 1.5B tokens [natural language]; GitHub: 0.7B tokens of C++, Python, Verilog [code] | No | 7B: 2,710 A100 hours; 13B: 5,100 A100 hours | Script generation; chatbot (88 practical questions on arch/design/verification); bug summarization and analysis | Mostly human rating | A larger learning rate (3e-4 vs. 5e-6) degrades performance significantly | In most cases, 70B without FT beats 13B with FT |
| ChatEDA | Fine-tuning | LLaMA2, 70B | 1,500 instructions generated via in-context learning (examples given in the prompt), plus proofreading | No | 15 epochs, 8× A100 80 GB | Task planning; script generation | 1. Is the task planning accurate? 2. Is the generated script accurate? | Auto-regressive objective? | |
| RTL-Coder | Fine-tuning | Mistral-7B-v0.1 | 27K+ samples (problem + RTL code pairs) | Yes | 4× RTX 4090 | RTL code generation | VerilogEval + RTLLM | The new training scheme seems very effective; using the RTLLM generation method, functional correctness for GPT-4 even reaches 60% | |

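My Story with the Three Kingdoms

In middle school I was especially good at history and geography, so good that I almost never scored below 100. A large part of the reason was probably interest. To this day I still clearly remember one scene: on weekend afternoons, in the warm sun, I lay in a lounge chair, leisurely reading all kinds of popular history and geography books and novels.

Romance of the Three Kingdoms was among them. But back then, naive as I was, I simply treated it as "a storybook about heroes." What I loved was the all-capable Zhuge Liang, Zhao Zilong charging in and out of the enemy ranks seven times, and one heroic tale after another. Just like my fascination with Ultraman Tiga, I, like most boys, worshipped these heroes and believed in them completely: at any moment they would be like light, the world would trust and support its heroes unconditionally, and in the end the heroes would defeat evil and save the world.

Perhaps starting in university, as I gradually had to deal with society, face pressures other than exams, and take on the responsibilities of an adult, my understanding grew deeper and more unsparing. There is no Ultraman in this world and no heroes, only people who work hard and do not necessarily succeed. Zhuge Liang could not summon wind and rain, and Zilong did not charge in and out seven times (though he really did rescue A Dou at Changban). The lives of these "heroes" were in fact full of mundane messes: where does the grain for tens of thousands of soldiers come from, where do they relieve themselves, what do you do when raw recruits sneak off to steal oranges, what do you do when your wife and children back home fall ill...

Yet precisely because of this, I came to love these people of Shu Han even more.

Reading about Sima Yi's "oath by the Luo River," I admired all the more how the Chancellor, for all his high office, truly lived up to "bending his back to the task until his dying day."

Seeing Cao Cao massacre city after city, I came to love all the more Liu Xuande, who led the common people across the river, saying, "I cannot bear to abandon them."

Only after playing the "refined egoist" too many times in my own life choices can I marvel at the courage and resolve of Jiang Wei, who "did what he knew could not be done" so that "the Han house, now in darkness, might shine once more."

I believe the arena of power is not only intrigue and deceit; I believe that even when the odds are slim, we can still give it our best; I believe relationships are not only about interests. I believe these things not because I have lived them myself, nor because I learned them in class, but because I know that 1,800 years ago there was a group of idealists who, whether they succeeded or not, truly lived this way.

Across the rolling river of thousands of years, countless kings, nobles, generals, and ministers have turned to dust, and I feel truly fortunate to be able to read their stories. Whenever I am disheartened, they seem to turn into light in my heart, showing me the way and spurring me onward.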

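Random Thoughts After DAC23

Hey Neway, it's 12:22 a.m. here in California. After a week at DAC I should be exhausted, but I'm wide awake. So I might as well write something to record this DAC.

The damned pandemic is finally dead, and I got to see many good friends from my Hong Kong days. Some seem unchanged, while others have been through sadness and setbacks. Whenever I have negative experiences like that, I comfort myself: just treat it as a new life experience. But I can't quite bring myself to comfort them with that line; I just feel sad and sorry along with them, and also feel the cruelty of life: it keeps flowing forward like a long river, and ordinary people like us can hardly be the helmsman, only pushed along by the years in whatever direction.

We who are so good at optimization seem unable to optimize our own lives, even just a little.

Back to this DAC. During my presentation I still spoke too fast because of nerves. Back at Apple, Mark had advised me to take a deep breath before presenting and to watch my pace. Turns out I only remembered the first half, sigh. But apart from speaking a bit fast and putting a few too many equations on the slides, everything else seemed fine. I don't know when it happened, but I seem to have picked up the talent of "looking confident even when nervous," which is nice. I really should be more confident: this is my own work, months of my effort, and if even I lack faith in it, that would be unfair to the me who did the work.

In-person conferences like this are genuinely useful for broadening one's horizons. I feel I saw more EDA and PD (physical design) in these few days than in the entire past year. Before, it was like the blind men and the elephant; here I could drop into different sessions and learn about each group's latest work, an opportunity that reading papers on arXiv or Google Scholar cannot match. Some peer pressure is unavoidable, but mostly it was reflection, learning, and gains.

BTW, this time I also met many professors I had only ever seen in papers. It felt a bit like a small fry attending the grand martial-arts assembly for the first time and meeting the heads of all the sects, hahaha. From this angle, academia really is like a wuxia world. The highly influential papers are the Eighteen Dragon-Subduing Palms and the Six Meridians Divine Sword, while my papers for now are just "fancy but useless moves" and "flailing windmill punches," haha. (So does going to industry mean working at an escort agency or something? XD)

Whenever I write pieces like this, I put "Hey Kong" on repeat. I also really want to know what the future you is doing, and to hear what the future you would say to me. But whatever happens, I hope you and everyone else are doing well.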

Memories of Texas

Mark Praised My Work

| Original | Correction | Reasoning |
|---|---|---|
| Central to my research interests are optimization and GPU-accelerated methods in VLSI design and test, as well as geometric deep learning and its applications in EDA. | My primary research interests lie in optimization, GPU-accelerated techniques in VLSI design and testing, alongside geometric deep learning and its applications in Electronic Design Automation (EDA). | Clarification and enhanced readability. |
| My research endeavors have culminated in over a dozen publications, including three best-paper awards. | My research efforts have resulted in more than a dozen publications, including three best-paper awards. | Improved sentence structure for clarity. |
| Chiplets, exotic packages, 2.5D, 3D, mechanical and thermal concerns, gate all around, …, make the already-hard problem of IC design that much more challenging. | The incorporation of chiplets, exotic packages, 2.5D and 3D integration, as well as mechanical and thermal considerations, alongside emerging technologies like gate-all-around (GAA), exacerbates the already complex nature of IC design. | Enhanced clarity and precision. |
| Existing CPU-based approaches for design, analysis, and optimization are running out of steam, and simply migrating to GPU enhancements is not sufficient for keeping pace. | Traditional CPU-based approaches for design, analysis, and optimization are becoming inadequate, and a mere transition to GPU enhancements is insufficient to keep pace. | Improved phrasing for academic style. |
| New ways of solving both existing and emerging problems are therefore desperately needed. I am very much attracted to these types of challenges and take pleasure in generating solutions that exceed old techniques by orders of magnitude. | There is an urgent need for innovative solutions to address both existing and emerging challenges. I am particularly drawn to these types of problems and find satisfaction in devising solutions that surpass previous methods by significant margins. | Strengthened expression of urgency and motivation. |
| In collaboration with Stanford University, my lab at CMU developed a new testing approach for eliminating defects that led to silent data errors in large compute enterprises \cite{li2022pepr}. Our approach involved analyzing both the physical layout and the logic netlist to identify single- or multi-output sub-circuits. The method is entirely infeasible without using GPUs, which allows us to extract more than 12 billion sub-circuits in less than an hour using an 8-GPU machine. In contrast, a CPU-based implementation required a runtime exceeding 150 hours. | In collaboration with Stanford University, my lab at CMU devised a novel testing methodology to eliminate defects responsible for silent data errors in large-scale compute infrastructures \cite{li2022pepr}. Our approach entailed scrutinizing both the physical layout and the logic netlist to pinpoint single- or multi-output sub-circuits. This method is entirely infeasible without GPUs, which enable us to extract over 12 billion sub-circuits in under an hour on an 8-GPU system. In contrast, a CPU-based implementation demanded a runtime exceeding 150 hours. | Enhanced clarity and precision. |
| My summer intern project concerning global routing at NVIDIA\footnote{Submitted to IEEE/ACM Proceedings Design, Automation and Test in Europe, 2024} is another example to demonstrate. Specifically, traditional CPU-based global routing algorithms mostly route nets sequentially. However, with the support of GPUs, we proposed and demonstrated a novel differentiable global router that enables concurrent optimization of millions of nets. | My summer internship project on global routing at NVIDIA\footnote{Submitted to IEEE/ACM Proceedings Design, Automation and Test in Europe, 2024} serves as another illustration. Conventional CPU-based global routing algorithms predominantly route nets sequentially. With the aid of GPUs, however, we introduced and demonstrated a novel differentiable global router that enables simultaneous optimization of millions of nets. | Improved phrasing for academic style. |
| Motivated by my intern project at Apple in 2022. Unlike traditional floorplanning algorithms, which heavily relied on carefully designed data structure and heuristic cost function, I first proposed a Semi-definite programming-based method for initial floorplanning, which is a totally new method and outperforms previous methods significantly \cite{10247967}. Furthermore, I designed a novel differentiable floorplanning algorithm with the support of GPU, which is also the pioneering work that pixelized the floorplanning problem. | Inspired by my internship project at Apple in 2022, I introduced a fundamentally new approach to initial floorplanning. In contrast to conventional methods, which heavily depend on intricately crafted data structures and heuristic cost functions, I proposed a semidefinite programming-based approach that significantly outperforms previous methods \cite{10247967}. Additionally, I devised an innovative differentiable floorplanning algorithm with GPU support, marking a pioneering effort in pixelizing the floorplanning problem. | Enhanced clarity and precision. |
| While Artificial Intelligence (AI) has witnessed resounding triumphs across diverse domains—from Convolutional Neural Networks revolutionizing Computer Vision to Transformers reshaping Natural Language Processing, culminating in Large Language Models propelling Artificial General Intelligence (AGI)—its impact on the IC domain has been somewhat less revolutionary than anticipated. | Although Artificial Intelligence (AI) has achieved remarkable success in various domains, from the revolutionizing effects of Convolutional Neural Networks on Computer Vision to the transformative impact of Transformers on Natural Language Processing, culminating in Large Language Models propelling progress toward Artificial General Intelligence (AGI), its influence on the IC domain has been somewhat less groundbreaking than anticipated. | Strengthened expression and enhanced precision. |
| This can be attributed, in part, to the irregular nature of elements within the VLSI workflow. Notably, both logic netlists and Register Transfer Level (RTL) designs inherently lend themselves to representation as hyper-graphs. Moreover, the connectivity matrix among blocks, modules, and IP-cores is aptly described by a directed graph. Unlike the regularity found in images or textual constructs, the application of AI to glean insights from such irregular data remains an ongoing inquiry. | This can be attributed, at least in part, to the irregular nature of components within the VLSI workflow. Notably, both logic netlists and Register Transfer Level (RTL) designs inherently lend themselves to representation as hyper-graphs. Furthermore, the connectivity matrix among blocks, modules, and IP-cores is aptly described by a directed graph. In contrast to the regularity found in images or textual constructs, the application of AI to extract insights from such irregular data remains an ongoing area of exploration. | Enhanced clarity and precision. |
| My prior investigations into layout decomposition \cite{li2020adaptive} and routing tree construction \cite{li2021treenet} vividly underscore the immense potential and efficacy of geometric learning-based methodologies in tackling IC challenges. | My previous studies on layout decomposition \cite{li2020adaptive} and routing tree construction \cite{li2021treenet} strongly emphasize the significant potential and effectiveness of geometric learning-based approaches in addressing IC challenges. | Improved phrasing for academic style. |
| A recent contribution \cite{li2023char} of mine delves into the theoretical boundaries of Graph Neural Networks (GNNs) in representing logic netlists. Drawing upon these foundations and experiences, my overarching research ambition in my PhD trajectory is to develop the Large netlist model to capture the function information of logic netlist. | | |

Jottings, June 2023

Install PyG on an Arm (Apple Silicon) Mac

- Install nightly PyTorch:

```shell
pip3 install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cpu
```

- Install these packages from source:

```shell
# Pin a stable PyTorch release (this replaces the nightly build above if both steps are run)
pip install --no-cache-dir torch==1.13.0 torchvision torchaudio
# Build the PyG extension libraries from their GitHub sources
pip install git+https://github.com/rusty1s/pytorch_sparse.git
pip install git+https://github.com/rusty1s/pytorch_scatter.git
pip install git+https://github.com/rusty1s/pytorch_cluster.git
# Finally install PyG itself
pip install --no-cache-dir torch-geometric
```
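To confirm the packages above are actually in place without importing them, a small check like the following can help. It is a sketch: `PYG_STACK` and `check_stack` are hypothetical names, and the mapping from import names to PyPI distribution names (`torch-sparse`, `torch-scatter`, `torch-cluster`, `torch-geometric`) is an assumption.

```python
import importlib.util
from importlib import metadata

# Hypothetical mapping: import name -> assumed PyPI distribution name
PYG_STACK = {
    "torch": "torch",
    "torch_sparse": "torch-sparse",
    "torch_scatter": "torch-scatter",
    "torch_cluster": "torch-cluster",
    "torch_geometric": "torch-geometric",
}


def check_stack(stack=PYG_STACK):
    """Map each module to its installed version, a placeholder, or None if missing."""
    report = {}
    for module, dist in stack.items():
        # find_spec locates a top-level module without executing it
        if importlib.util.find_spec(module) is None:
            report[module] = None
            continue
        try:
            report[module] = metadata.version(dist)
        except metadata.PackageNotFoundError:
            # Importable, but no distribution metadata under the assumed name
            report[module] = "installed (version unknown)"
    return report


if __name__ == "__main__":
    for module, status in check_stack().items():
        print(f"{module:16s} {status or 'MISSING'}")
```

Running it as a script prints one line per package, with `MISSING` for anything that did not install.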

Advantages of ReLU

STRATEGIES FOR PRE-TRAINING GRAPH NEURAL NETWORKS

Motivation: Naïve pre-training strategies (graph-level multi-task supervised pre-training) can lead to negative transfer on many downstream tasks.

Pre-training Methods

NODE: Context prediction, to capture graph structure information

context graph: for each node, the subgraph between $r_1$ and $r_2$ hops away from it.

context anchor nodes: assuming the GNN aggregates over $r$ hops, the context anchor nodes are the nodes shared by the context graph and the $r$-hop neighborhood (i.e., the nodes between $r_1$ and $r$ hops away).

- Obtain the context embedding:

  - Use an auxiliary GNN (the context GNN) to compute node embeddings on the context graph.

  - Average the embeddings of the context anchor nodes to obtain a fixed-length context embedding.

- Train so that the inner product of the context embedding and the corresponding $r$-hop node embedding, passed through a sigmoid, is close to 1 (the prior being that the two embeddings must be similar when they come from the same neighborhood); contexts sampled from other nodes serve as negatives.
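The context-prediction steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the graph is an adjacency dict, hop ranges are taken as inclusive, the anchor-node embeddings are assumed to be already computed by the context GNN, and `context_and_anchors` and `context_prediction_loss` are hypothetical helper names.

```python
import math
from collections import deque


def hop_distances(adj, source):
    """BFS hop distance from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


def context_and_anchors(adj, v, r, r1, r2):
    """Context nodes (r1 <= hop <= r2) and anchors (context nodes within r hops)."""
    dist = hop_distances(adj, v)
    context = {u for u, d in dist.items() if r1 <= d <= r2}
    anchors = {u for u in context if dist[u] <= r}
    return context, anchors


def mean_vector(vectors):
    """Average of equal-length vectors: the fixed-length context embedding."""
    dim = len(vectors[0])
    return [sum(vec[i] for vec in vectors) / len(vectors) for i in range(dim)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def context_prediction_loss(center_emb, anchor_embs, negative_contexts):
    """Negative-sampling BCE: the true context should score ~1, sampled ones ~0."""
    positive = mean_vector(anchor_embs)
    loss = -log_sigmoid(dot(center_emb, positive))
    for ctx in negative_contexts:
        # log(1 - sigmoid(s)) == log(sigmoid(-s))
        loss -= log_sigmoid(-dot(center_emb, ctx))
    return loss / (1 + len(negative_contexts))
```

On a path graph 0-1-2-3-4-5 with center node 0, $r = 2$, $r_1 = 1$, $r_2 = 4$, the context is nodes 1 to 4 and the anchors are nodes 1 and 2. The loss is lower when the center embedding aligns with its own averaged context than with a sampled one.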

NODE (also applies to GRAPH level): Mask attributes and predict them

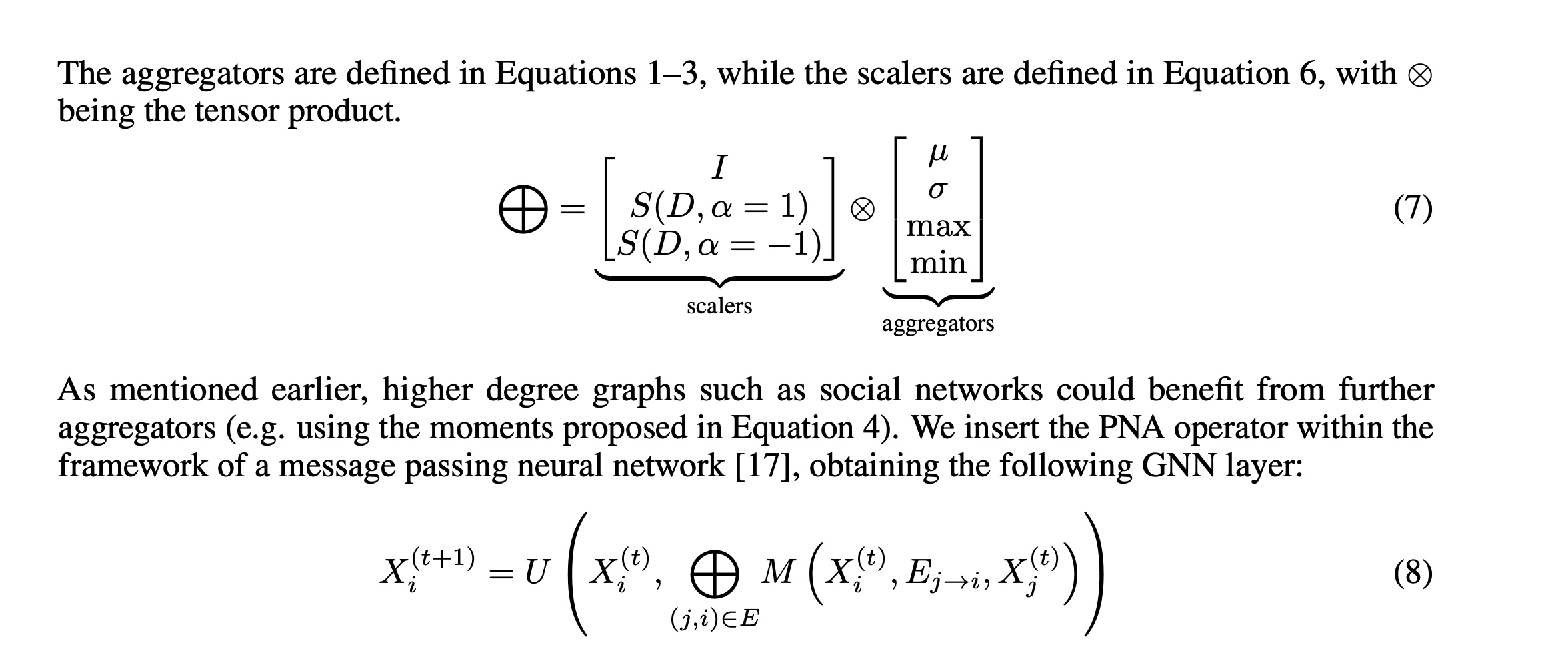
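As a rough sketch of attribute masking, assuming categorical node attributes stored in a list: `MASK`, `mask_node_attributes`, `predict_by_neighbor_vote`, and `masked_accuracy` are hypothetical names, the 15% rate is an assumed default, and the neighbor majority vote only stands in for the GNN plus classifier that would actually predict the masked attributes.

```python
import random
from collections import Counter

MASK = "<mask>"  # hypothetical mask token; a real model would use a dedicated attribute index


def mask_node_attributes(node_attrs, rate=0.15, seed=0):
    """Return (masked copy, masked indices, original labels at those indices)."""
    rng = random.Random(seed)
    k = max(1, round(rate * len(node_attrs)))
    idx = sorted(rng.sample(range(len(node_attrs)), k))
    masked = list(node_attrs)  # copy so the input is not mutated
    for i in idx:
        masked[i] = MASK
    return masked, idx, [node_attrs[i] for i in idx]


def predict_by_neighbor_vote(adj, masked, idx):
    """Stand-in predictor: most common unmasked neighbor attribute (None if none)."""
    preds = []
    for i in idx:
        votes = Counter(masked[j] for j in adj[i] if masked[j] != MASK)
        preds.append(votes.most_common(1)[0][0] if votes else None)
    return preds


def masked_accuracy(predictions, labels):
    """Fraction of masked attributes recovered correctly."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)
```

In pre-training, the predictions would instead come from the GNN's node embeddings at the masked positions, and the loss would be a cross-entropy against the original labels.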

Principal Neighbourhood Aggregation for Graph Nets

单个aggregator不行,需要combine多种不同aggregator

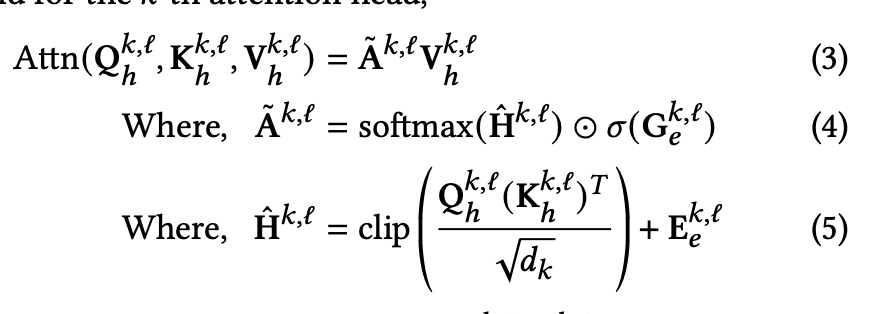

这里的S 是基于degree的一个额外系数:

alpha pos时,node的degree(d)越大,S值就越大,于是feature值越大。(amplification)

反之则为 attenuation

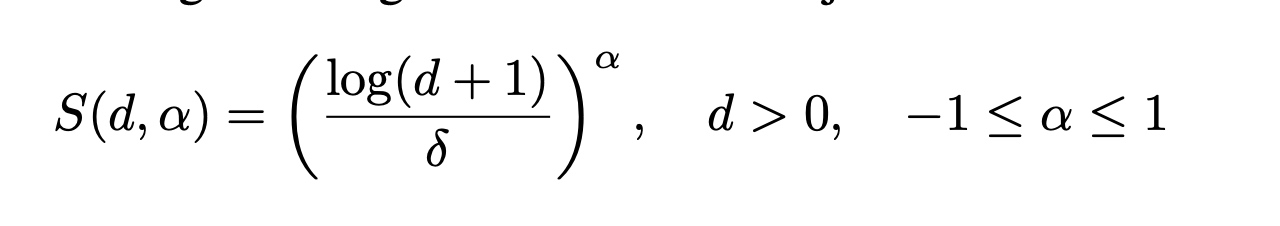
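A minimal sketch of PNA-style aggregation for a single node, using the scaler $S(d, \alpha) = \left(\log(d+1)/\delta\right)^{\alpha}$, where $\delta$ is the average of $\log(d+1)$ over the training-graph degrees, following the definition in the PNA paper. It combines four aggregators (mean, std, max, min) with three scalers ($\alpha = 0, 1, -1$) and omits the learned layers before and after aggregation. The function names are hypothetical, and each node is assumed to have at least one neighbor (for $d = 0$ the attenuation scaler divides by zero).

```python
import math

EPS = 1e-5


def aggregate(neighbor_feats):
    """Per-dimension mean, std, max and min over the neighbors' feature vectors."""
    n = len(neighbor_feats)
    columns = list(zip(*neighbor_feats))
    mean = [sum(c) / n for c in columns]
    # std = sqrt(ReLU(E[x^2] - E[x]^2) + eps) keeps the square root well-defined
    std = [math.sqrt(max(sum(x * x for x in c) / n - m * m, 0.0) + EPS)
           for c, m in zip(columns, mean)]
    return [mean, std, [max(c) for c in columns], [min(c) for c in columns]]


def degree_scaler(d, alpha, delta):
    """S(d, alpha) = (log(d + 1) / delta) ** alpha."""
    return (math.log(d + 1) / delta) ** alpha


def average_log_degree(train_degrees):
    """delta: mean of log(d + 1) over the node degrees seen in training."""
    return sum(math.log(d + 1) for d in train_degrees) / len(train_degrees)


def pna_message(neighbor_feats, delta):
    """Concatenate {identity, amplification, attenuation} x {mean, std, max, min}."""
    d = len(neighbor_feats)
    aggs = aggregate(neighbor_feats)
    out = []
    for alpha in (0, 1, -1):  # alpha = 0 gives S = 1 (identity)
        s = degree_scaler(d, alpha, delta)
        for agg in aggs:
            out.extend(s * value for value in agg)
    return out
```

For a node with two neighbors and $\delta = \log 3$, all three scalers equal 1, so the output is the four aggregates repeated three times; for $d = 10$ the $\alpha = 1$ scaler exceeds 1 and the $\alpha = -1$ scaler falls below 1.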

Global Self-Attention as a Replacement for Graph Convolution

The basic idea: rather than hard-coding what edges do, which introduces an inductive bias, let the transformer learn it by itself.

Here, edge information is used in three places:

- $G$ is a linear projection of the edge embeddings and acts as a gate controlling the attention (Eq. 4).

- $E$ is a linear projection of the edge embeddings and directly biases the attention (Eq. 5).

- SVD of the adjacency matrix $A$: the left and right singular vectors are used as positional encodings.
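The two attention-side uses of edge information can be sketched for one head in plain Python: $E$ is added to the scaled dot-product logits, and $\sigma(G)$ multiplies the softmax weights element-wise. This is a simplified sketch, not the paper's full layer: $E$ and $G$ are passed in directly as $n \times n$ matrices instead of being projected from edge embeddings, there is no output projection or normalization, and `edge_augmented_attention` is a hypothetical name.

```python
import math


def softmax(row):
    """Softmax with max-subtraction for numerical stability."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Logistic function written via tanh, which avoids exp overflow for large |x|."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def edge_augmented_attention(Q, K, V, E, G):
    """One head. Q, K: n x d_k; V: n x d_v; E, G: n x n edge channels.

    logits[i][j]  = <Q_i, K_j> / sqrt(d_k) + E[i][j]             (edge bias)
    weights[i][j] = softmax_j(logits[i])[j] * sigmoid(G[i][j])   (edge gate)
    """
    n, d_k = len(Q), len(Q[0])
    out = []
    for i in range(n):
        logits = [sum(q * k for q, k in zip(Q[i], K[j])) / math.sqrt(d_k) + E[i][j]
                  for j in range(n)]
        weights = [a * sigmoid(G[i][j]) for j, a in enumerate(softmax(logits))]
        out.append([sum(w * V[j][t] for j, w in enumerate(weights))
                    for t in range(len(V[0]))])
    return out
```

With an open gate ($G$ large) and zero bias, each node averages all values; a very negative $E[i][j]$ removes pair $(i, j)$ from the softmax, and a closed gate ($G$ very negative) suppresses the output regardless of the logits.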

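Inheritance and Rebellion

Introduction

Science is a process of inheritance and accumulation; even someone as great as Newton did not develop dynamics, or collect and organize the data, single-handedly (Cohen 62). Yet from Newton's laws of motion to Darwin's theory of natural selection to Einstein's principle of relativity, each new theory has often come with the negation of earlier science.

This essay begins by examining the inheritance and rebellion of several great scientists, analyzes the roles that inheritance and rebellion play in the development of science, and then reflects on what lessons they hold for us.

The Inheritance and Rebellion of Great Scientists

Newton

No history of science can avoid Newton. The publication of his Mathematical Principles of Natural Philosophy (hereafter the Principia) is one of the greatest events in the entire history of physics (Cohen 49), and Newton is widely regarded as the founder of modern science, which separated science from religion (Chen Fangzheng 595). To later generations, in an era that took the Aristotelian system as the standard of science, Newton appeared as a rebel. This is probably the last thing Newton would have expected. In fact, whether on the religious side, where he once used celestial motion to prove the existence of God (Cohen 55), or on the mathematical side, where he championed classical geometry, Newton was an heir to ancient tradition rather than a rebel against it, which also matches his own view of himself (Chen Fangzheng 595).

Newton's view of himself is not without reason. The modern scientific revolution was triggered by the revival of ancient Greek science (Chen Fangzheng 628), and much of Newton's scientific system inherited ancient Greek scientific thinking. Newton distilled the law of inertia from countless examples, and ancient Greek science likewise valued deduction and induction, for instance in attempting to reduce common natural phenomena and landscapes to earth, water, fire, and air, the four elements that make up the world (Lindberg 27). The Principia proves its theorems rigorously and uses mathematics ingeniously, which also fits the characteristics of ancient Greek science (Thomas 89).

Yet calling Newton merely an heir is also inadequate. Many of his contributions, such as calculus and experimental science, went beyond the research, and even the comprehension, of his predecessors, and to some extent overturned earlier scientific systems and ways of thinking; this is why the public sees Newton as a "rebel." In my view, Newton is best described as an heir, a developer, and a rebel. He inherited some of his predecessors' ways of thinking, absorbed the experimental and theoretical achievements of other scientists of his time, and ultimately developed a new philosophy that systematically combined mathematics, observation, and thought (Yu Ying-shih); this philosophy in turn overturned traditional theories, which is the sense in which he was a rebel.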

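Darwin

Darwin, another outstanding example of a rebel, rebelled in an obvious way. He argued that natural selection gives individuals with favorable variations a better chance of surviving and passing those variations on, and that the gradual accumulation of favorable variations produces the evolution of species, eventually giving rise to higher organisms such as humans (Darwin 95). This was undoubtedly a huge challenge to the doctrine that God created humankind.

In fact, Darwin was not merely a rebel. He absorbed Lyell's theory of slow geological change, Malthus's idea that limited resources lead to a struggle for existence, and Lamarck's theory of the inheritance of characteristics, combined the three, and, connecting them with his own experiments and theories, ultimately formed the theory of natural selection.

Kandel

As an outstanding representative of contemporary scientists, Kandel's research path is even more thought-provoking. He did not appear as a "rebel," but as an excellent collaborator and heir.

When he took on the biological basis of consciousness, a hard problem no one had yet "developed," his mentor advised him to study the inner world of nerve cells from the bottom up and then move on to higher-level theories of mental structure (Kandel 180); and when he was searching for where the unity of consciousness is located, the great Crick gave him the insight about the claustrum (Kandel 190). Clearly, human knowledge can no longer be expanded in every direction by one person; in contemporary science, collaboration among many people has become inevitable.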

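Analysis

As shown above, Darwin and Newton both went through a process of absorbing others' theories, developing their own, and overturning earlier ones. But neither set out to rebel; only after developing their own theories did they find them in conflict with traditional theories, and so they chose to become rebels and overturn the old ones.

An analogy illustrates the roles of inheritance, rebellion, and development in science. Think of science as an exam paper. Inheritance is like the basic questions at the front: you only need to learn your predecessors' theories. Development is like the hard problems at the back, which have no model answers: you must derive deeper theories to solve them. One part of rebellion is the ability to step outside the circle of earlier knowledge while deriving your theory; the other part is the courage, once you have solved the hard problems, obtained your own theory, and found that the basic questions cannot be answered the old way, to rewrite every basic question at the risk of scoring zero.

Today's high-level science is like several people working together on an exam made up entirely of hard problems: everyone offers their own views, helps one another, and absorbs each other's ideas; this is development. When one paper takes several generations to complete and later generations keep solving it by building on their predecessors' reasoning, this is inheritance. When later generations find a flaw in their predecessors' reasoning and strike it out, this is rebellion. And of course, once the paper is finished, its questions become basic questions waiting for future generations.

Lessons

For us students, inheritance is undoubtedly easy; development is harder, because it requires us to explore unknown possibilities; and rebellion is harder still, because we first need a rigorous system of our own to overturn the old one, which takes sufficient knowledge, while in the course of learning we must also take care not to get trapped within the limits of the original system's thinking. Therefore, while studying, it is extremely important to train our critical thinking and our willingness to make mistakes.

At the same time, we must not rebel blindly. Ignoring the experience our predecessors have gained and blindly chasing novelty, vainly hoping to dream up a major discovery that changes the whole history of science, is clearly unwise. From Newton to Darwin, not one of them began to rebel until after learning and experimenting for countless hours and building a rigorous scientific system of their own. If you cannot yet walk, how can you run? This is especially important in today's scientific environment.

Rather than rebellion, we should think more about how to develop knowledge on the basis of inheritance. In the narrow sense, once we have mastered our current courses, we can make use of the university's vast resources to explore higher-level problems in depth. In the broad sense, no one can assert that the edifice of physics Einstein built will never collapse, but we can be sure that science will keep developing. What we can do is learn our way to the edge of human knowledge, then push the frontiers of science outward as a team, and pass this aspiration on.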

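Conclusion

Crick said that if he could live a little longer, he would do an experiment to confirm whether the claustrum is activated when a stimulus enters conscious perception. He did not finish it, but his ideas inspired those who came after, and so Kandel completed it. How could such inheritance among scientists fail to move us?

The scientists in history who contributed to the development of science are like the "ghosts" of stars that have already become neutron stars yet whose light still shines in the vast, silent night sky: even though they are gone, their starlight keeps shining on us.

If we have a ray of starlight with us while seeking truth in the dark, we may still take detours, but that is far more reliable than walking forward with our eyes shut. And when we walk into a night with no starlight at all, we can grope our way forward without hesitation, because one day someone persistently groping forward will become the starlight in that night, lighting the way for those who come exploring after.

In the future, when you look up at the starry sky again and see these stars, which may have died millions of years ago yet still cast their light on your face, you will think of Aristotle, of Newton, of Darwin. The people are gone, but science remains; perhaps this is the charm of science.

And as we blaze trails through hardship, as Kandel carried on Crick's work, with starlight overhead and our feet on the ground, we keep exploring realms our predecessors never reached or could not reach; perhaps this is the best interpretation of inheritance and rebellion.

I wrote this essay in April 2015 as the final paper for my course "In Dialogue with Nature." Eight years have passed in the blink of an eye, and I am now actually doing research that counts as something "new." Every time I reread it, a flood of thoughts comes over me. I hope my childlike heart will not be worn away by time, that I can truly keep my feet on the ground, and that, with luck, I too can become a ray of starlight in the long night.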

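Some Thoughts and Fun Things from February (2023 Edition)

After finishing this piece, I noticed that my writing has clearly deteriorated. But what can I do? Time has stolen my talent.

About This Floorplanning Paper

The whole journey of this work was a bit philosophical:

I originally planned to submit a paper on the work I did at Apple, but Apple wouldn't release the data;

instead, I found a subproblem within that work and tinkered my way into this one;

once the paper was done, the submission system's gotcha-style rules blocked me from submitting it.

At that point I had already written it off in my mind: so be it. But for some reason I kept "pestering" the chair two or three more times, and in the end it got submitted.

Life: you never know what the next chocolate will taste like, but if you never try it, you'll never find out that the next one might be the "surprise" of a successful submission that gets accepted.

As Jose said:

Fantastic. Persistence wins.

"Having done my utmost and still falling short, I can be free of regret." "Free of regret" matters, but "having done my utmost" matters just as much.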

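Paper Plans

Before coming to CMU, I set a five-year plan that looked perfect:

- Get one paper accepted each year

- Submit one new paper each semester

The plan has been going smoothly, and so far I'm on track. But I suddenly noticed a problem:

If one paper is accepted per year but one is submitted per semester, doesn't that mean half of my submissions will end up on arXiv, like my coloring paper?

Hmm...

Direction

After meeting some people and submitting this many papers, I've realized that research direction matters enormously.

Although the mathematical methods needed in different directions are largely similar,

publishing papers, landing a faculty position, and raising funding depend not only on your mathematical ability, implementation skills, and thinking, but also on whether your direction is a good match.

Thinking about this gives me a headache.

As I've said before, I feel like a free-spirited cement layer who lacks the big-picture view an architect should have.

Maybe I need to learn more, think more, talk more, and listen more before I can find that direction.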

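Romance of the Three Kingdoms XIV

Back when I was hooked on Romance of the Three Kingdoms XIV, I was unhappy with the game's stat settings, so I taught myself Cheat Engine (CE), tweaked some values, and posted the mod online.

For the first day or two, nothing happened, not even a single reply. I was pretty disappointed: my first attempt at game modding had ended just like that, without making a splash.

Then, more than half a year later, the post blew up, and the flood of "master" and "legend" comments underneath nearly made me lose myself.

A middle-school student even found my WeChat through my phone number and offered to pay me to tweak stats for him, haha.

Whenever I'm worn down by the repetition of daily work and meetings, thinking of things like this makes me feel I'm truly living. How nice.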

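我记得 (I Remember)

This is a song I've had on repeat lately.

At first I just thought the melody was nice, until I heard this line:

On the other end of the starry sky, longing has never stopped, like the name on a tombstone

And then I couldn't stop thinking of Gao Wu.

Of how he once texted my aunt that I was in a bad mood and asked her to look after me more;

of how he secretly kept my mom updated on my moods and studies behind my back;

of the last two months of senior year, when we hid under the covers together secretly listening to his favorite radio station;

of the message he left me on QQ after we went to university: Li Wei, how have you been lately;

of how, when I learned he had died, I cried so hard I couldn't stand;

of visiting his home and finding his father all alone in a house with nothing but bare walls.

Maybe in another universe he and I are still best friends, and when I go back to China I look him up to drink and laugh together.

But in this universe he is gone forever. The world is so full of wonder, yet all that is left of Gao Wu is a tombstone, forever alone in those mountains.

Still, I believe, as The Three-Body Problem says, that information carved in stone often lasts longer than anything stored by modern technology.

Gao Wu's tombstone will surely always be there, just like my longing for him.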