For More information on GNN, please refer to here

General GNN

No normalization:

$$ {w}

H^{(k+1)} = activation((A+I)H^kW) \A = adjacent \ matrix \H^0 = features \ of \ graph \ nodes \W = trainable \ variables

$$

GCN can be also explained in a message-passing way where the intermediate representations can be viewed as messages.

The aggregation is the actual message-passing phase and each node passes its message to its neighbors along the edge ($X = (A+I)H^k$).

The encoder is served as the integration phase, in which each node integrates received the message and reduces it into its new message ($activation(XW)$).

Each message-pass and integration phase formulate one GCN layer.

The representation after the final layer is called the node embedding of each node and the graph embedding by GCN is usually obtained by a summation or mean operation using node embeddings.

How about HetGNN?

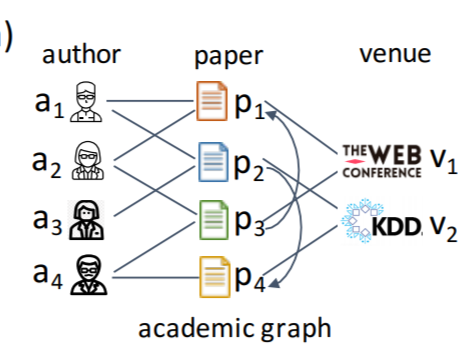

What is Het Graph?

Multiple kinds of edges, multiple kinds of nodes.

meta-path

- composed of edge types. e.t. author-paper-author

- meta-path indicates some sematic information. e.t. author-paper-author = co-author relationship/ paper contribution relationship

- multiple meta-paths (actually exponential to edge types ). (author-paper,author-paper-author,author-paper-venue,author-paper-venue-paper-author…)

IF we use general GNN to Het Graph…

- How to decide $A$, the adjacent matrix (there are multiple adjacent matrices for multiple types of edges)

- How to decide $H^0$, the input features of graph nodes. Different kinds of nodes have different kinds of features.

Also, there are some special points to be noted in Het Graph

- The number of direct neighbors are significantly unbalanced in some cases. (academic graph for example)

- The format of input feature may be different ( image, video, text… )

How to solve the problem?

Some more/modified steps are introduced:

[new] Reduce input content to vector feature

[modified] Decide neighbors (proper adjacent matrix $A$) to aggregate and aggregate neighbor features by different types (node type/edge type/meta path type)

[modified] encode new features by aggregated neighbor features

[new] encode new node feature by the generated new features of different types

| Input | Output | RGCN | HetGNN(KDD 19) | HetGAN(WWW 19) | GTN(NIPS 19) | General GCN | |

|---|---|---|---|---|---|---|---|

| [new] Reduce input content to vector feature of node | Input contents of each node (figs, texts…) | vector feature of each node | NA | contents->content features->node features(by biLSTM) | NA | NA | NA |

| [modified] Decide neighbors (proper adjacent matrix $A$) to aggregate and aggregate neighbor features by different types (node type/edge type/meta path type) | the graph | for each node $v$, tell him which nodes should be selected to receive there messages? | Direct neighbor, | select type-based neighbors with fixed size by random walk, | Manually selected meta-path neighbors | attention based meta-path(meta path is represented as a weighed multiplication of different edge type’s $A$) | Direct neighbor, |

| [modified] encode new features by aggregated neighbor features | Received messages of different type. | Encoded message of corresponding type | linear transformation | BiLSTM | attention sum with activation | linear transformation | linear transformation |

| [new] encode new node feature by the generated new features of different types | Encoded messages of different types | Final new feature(message) of this node $v$ | sum | attention sum | attention sum with activation | concat | NA |

| neighbor type | NA | NA | edge type | node type | meta path type | meta path type | NA |

Performance comparison

- [new] Reduce input content to vector feature

- biLSTM(0.67) > fc (0.65) [HetGNN]

- [modified] Decide neighbors (proper adjacent matrix $A$) to aggregate and aggregate neighbor features by different types (node type/edge type/meta path type)

- [modified] encode new features by aggregated neighbor features

- [new] encode new node feature by the generated new features of different types

- attention sum(0.768) > fc (0.763) [HetGNN]

- One more attention is always better… (icluding GTN)